Deep learning based distributed compression for efficient data transmission in multi-sensor networks

Reducing communication overhead in multi-sensor networks is becoming increasingly crucial in today’s connected world, where a powerful central server collects data from remote devices to run compute-heavy algorithms. Continuous transmission of high-dimensional data is challenging because of the limited uplink bandwidth available. Hence the design of efficient compression algorithms that address the following challenges is of vital importance.

- How do we optimize compression of high-dimensional data for the downstream algorithms?

- How do we handle the bandwidth distribution among multiple sources?

- How do we dynamically adjust the compression in order to prioritize the transmission of the most important features first?

In this work, we propose neural distributed principal component analysis (NDPCA), a deep learning based distributed compression framework that addresses all of these challenges simultaneously using a single model.

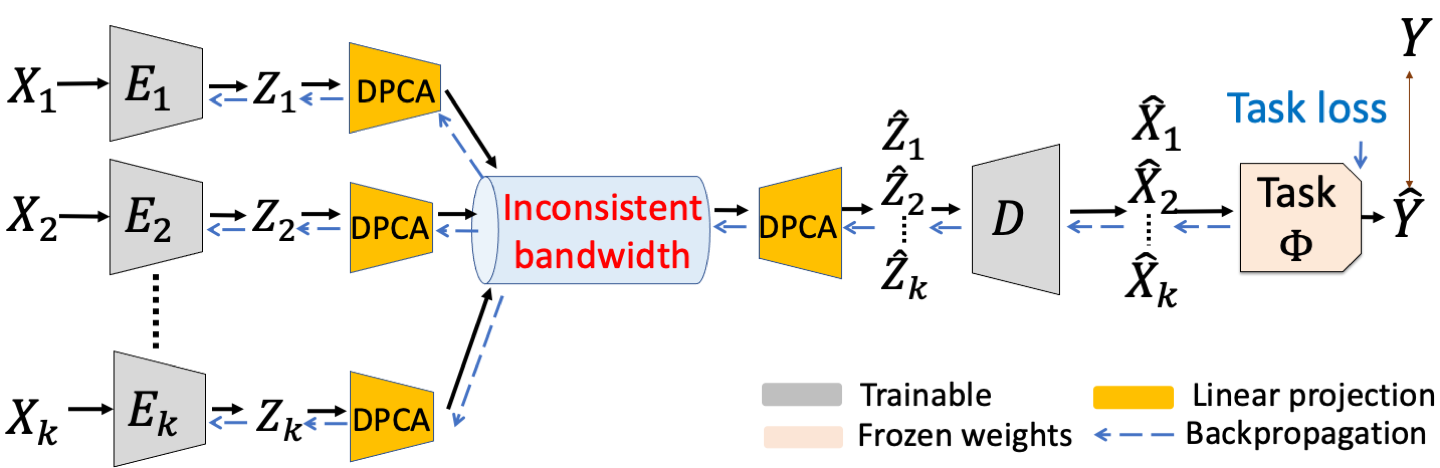

Figure 1: The NDPCA pipeline showing the flow of data from sensors to the central node and task model.

Problem Setup

In a distributed inference system, each sensor independently compresses its data before transmitting it to a central node, where a decoder reconstructs the data corresponding to all sensors. The reconstructed data is then fed into a task model, often a pre-trained machine-learning model, which produces the desired output. Because the sensor data is high-dimensional, it must be compressed at a rate that depends heavily on the available uplink bandwidth, and that rate directly impacts the performance of the downstream task model. Hence it is important for the learned compression model to support multiple rates in order to maximize the task performance at the central node.
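One way to write this setup down, using notation introduced here for illustration rather than taken from the paper: let \(x_i\) be the observation at sensor \(i\) of \(K\), \(E_i\) its encoder, \(D\) the joint decoder, \(\Phi\) the task model, \(y\) the desired output, \(\ell\) a task loss, and \(m\) the total number of latent dimensions the uplink can carry.

\[
z_i = E_i(x_i), \qquad \hat{x} = D(z_1, \dots, z_K), \qquad \hat{y} = \Phi(\hat{x})
\]

\[
\min_{E_1, \dots, E_K,\, D} \; \mathbb{E}\big[\ell(\hat{y}, y)\big] \quad \text{subject to} \quad \sum_{i=1}^{K} \dim(z_i) \le m
\]

Under these assumptions, supporting multiple rates means a single set of encoders and a single decoder should perform well across a range of values of \(m\).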

A practical example of this setting is computer vision on satellite imagery. A constellation of satellites can continuously monitor a geographic location, and the image and video data from all the satellites can be collected by a central processing server on the ground for collective inference. Note that each satellite holds only partial information about the location, namely its own view, and hence cannot run the inference optimally by itself. Satellite links typically have low bandwidth or low throughput, so aggressive compression becomes a necessity.

Efficient distribution of bandwidth among sources

Given that the final goal of the compression model is to maximize the performance of the downstream task model, the importance of each source for that task model needs to be accounted for when allocating bandwidth to each source. In order to automate this, we propose using a distributed version of principal component analysis (PCA), which we refer to as distributed PCA (DPCA).

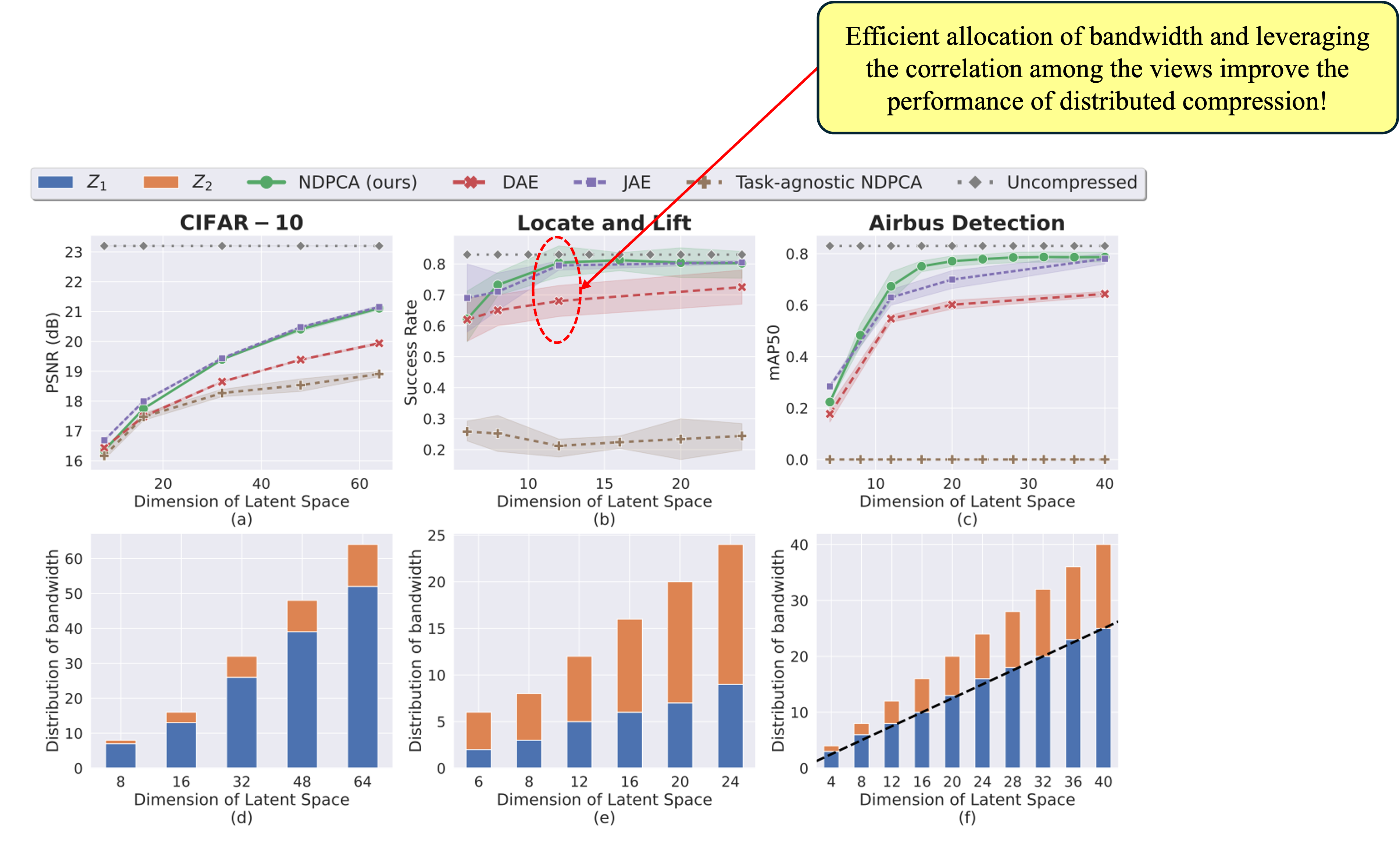

During the training phase, we introduce a PCA-based low-rank projection into the compression pipeline. It performs PCA over the neural representations to learn the principal components corresponding to each view. Using these learned principal components, we compare the importance of the views and retain only the highest-ranked components among all the views. This simple yet effective technique prioritizes transmitting the most important data first and protects the views that carry the most relevant information for the downstream task model.
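The cross-view selection step can be sketched in a few lines of Python. This is a minimal illustration, not the paper's implementation: it assumes each view's components are ranked by singular value, and the function names and the example values are hypothetical.

```python
from typing import Dict, List, Tuple


def select_global_components(
    singular_values: Dict[str, List[float]], budget: int
) -> List[Tuple[str, int]]:
    """Rank every (view, component) pair by singular value and keep the top `budget`."""
    ranked = [
        (value, view, index)
        for view, values in singular_values.items()
        for index, value in enumerate(values)
    ]
    # Descending singular value; ties are broken by view name, then component index.
    ranked.sort(key=lambda item: (-item[0], item[1], item[2]))
    return [(view, index) for _, view, index in ranked[:budget]]


def allocation(selected: List[Tuple[str, int]]) -> Dict[str, int]:
    """Count how many components each view was granted."""
    counts: Dict[str, int] = {}
    for view, _ in selected:
        counts[view] = counts.get(view, 0) + 1
    return counts


# Hypothetical singular values for two views (made up for illustration).
example = {
    "view_a": [9.0, 4.0, 1.5, 0.3],
    "view_b": [6.0, 0.8, 0.2, 0.1],
}
chosen = select_global_components(example, budget=4)
print(chosen)              # which components are kept, most important first
print(allocation(chosen))  # how the budget is split between the views
```

With these made-up values, the budget of four components is split unevenly, with `view_a` receiving three and `view_b` one, which mirrors the idea that a more informative view earns a larger share of the bandwidth.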

Variable Rate Compression

In real-world scenarios, bandwidth availability can be unpredictable, with higher bandwidths typically yielding better task performance. Hence it is crucial to have the ability to quickly change the compression rate based on the available bandwidth. By leveraging the hierarchical nature of the DPCA module, we choose the number of principal components to preserve based on the bandwidth and easily adapt the compression rate dynamically. We highlight that despite the support for multiple rates, we need to train only a single model.
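The rate adaptation described above can be sketched as a prefix lookup over one fixed ranking of components. The sketch below is illustrative only: `RANKING` is a hypothetical global ordering, and it assumes every coefficient costs a fixed 32 bits with no entropy coding, which the source does not specify.

```python
from typing import List, Tuple

# Hypothetical global ranking of (view, component index) pairs, most important first.
RANKING: List[Tuple[str, int]] = [
    ("view_a", 0), ("view_b", 0), ("view_a", 1),
    ("view_a", 2), ("view_b", 1), ("view_a", 3),
]


def components_for_bandwidth(bits_available: int, bits_per_coefficient: int = 32) -> int:
    """Number of coefficients that fit in the budget, capped at the ranking length."""
    if bits_available < 0:
        raise ValueError("bits_available must be non-negative")
    return min(len(RANKING), bits_available // bits_per_coefficient)


def components_to_send(bits_available: int, bits_per_coefficient: int = 32) -> List[Tuple[str, int]]:
    """Take the prefix of the ranking that fits in the current budget."""
    k = components_for_bandwidth(bits_available, bits_per_coefficient)
    return RANKING[:k]


for bits in (64, 128, 200):
    print(bits, components_to_send(bits))
```

Because every lower-rate selection is a prefix of every higher-rate one, changing the rate only changes where the ranking is cut, which is why no retraining is needed in this simplified picture.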

A Closer Look at NDPCA

Learning Low-Rank Task Representations

NDPCA uses principal component analysis to learn low-rank task representations from the data. By keeping only the features that matter most to the task, the framework can compress the data aggressively while limiting the loss in task performance.

Distributed Compression

Each sensor in the network independently compresses its data using the learned low-rank task representations, so only task-relevant information is transmitted. Because the sensors never need to exchange data with one another, this also avoids inter-sensor communication, whose overhead can grow as \(O(n^2)\) in the number of sensors \(n\).

Joint Decoding

At the central node, a joint decoder reconstructs the data from the compressed representations sent by all the sensors. The reconstructed data is then fed into the task model. Since only the important task-relevant features are represented, task performance is largely maintained.
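The encode-then-jointly-decode flow can be illustrated with a purely linear stand-in. This is a simplified sketch under stated assumptions, not NDPCA itself: random data replaces the neural encoder outputs, each view is reconstructed by plain PCA instead of a learned joint decoder, and all function names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)


def fit_pca_basis(latents: np.ndarray):
    """Return (mean, components) for one view; rows of `components` are principal directions."""
    mean = latents.mean(axis=0)
    _, _, vt = np.linalg.svd(latents - mean, full_matrices=False)
    return mean, vt


def encode(latents: np.ndarray, mean: np.ndarray, components: np.ndarray, k: int) -> np.ndarray:
    """Sensor side: keep only the first k PCA coefficients."""
    return (latents - mean) @ components[:k].T


def decode(codes: np.ndarray, mean: np.ndarray, components: np.ndarray) -> np.ndarray:
    """Central node: map the received coefficients back to the latent space."""
    k = codes.shape[-1]
    return codes @ components[:k] + mean


def joint_decode(codes_per_view, bases) -> np.ndarray:
    """Reconstruct every view and concatenate the result for the task model."""
    parts = [decode(codes, mean, comps) for codes, (mean, comps) in zip(codes_per_view, bases)]
    return np.concatenate(parts, axis=-1)


# Synthetic stand-ins for two views' latent representations (200 samples, 8 dims each).
scales_a = np.array([5.0, 3.0, 2.0, 1.0, 0.5, 0.2, 0.1, 0.05])
scales_b = np.array([2.0, 0.5, 0.3, 0.2, 0.1, 0.1, 0.05, 0.05])
views = [rng.normal(size=(200, 8)) * scales_a, rng.normal(size=(200, 8)) * scales_b]
bases = [fit_pca_basis(v) for v in views]
target = np.concatenate(views, axis=-1)

errors = []
for k_a, k_b in [(1, 0), (3, 1), (8, 8)]:  # nested allocations, low to high rate
    codes = [encode(v, m, c, k) for v, (m, c), k in zip(views, bases, (k_a, k_b))]
    recon = joint_decode(codes, bases)
    errors.append(float(np.mean((recon - target) ** 2)))
    print(f"k_a={k_a}, k_b={k_b}, mse={errors[-1]:.6f}")
```

In this toy setting the reconstruction error shrinks as more components are sent and vanishes (up to floating-point error) when all of them are kept. In the framework described here the quantity of interest is task performance, not reconstruction error, so the sketch only conveys the data flow.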

The Benefits and Significance

Adaptability to Varying Bandwidth

NDPCA can dynamically compress and transmit the most important features to match the available bandwidth, thereby maximizing the performance of the task model. This adaptability proves invaluable in real-world scenarios where bandwidth availability can fluctuate unpredictably.

Reduced Computing and Storage Overhead

Because a single model serves every compression rate, NDPCA avoids training and storing a separate model for each bandwidth level, which reduces computational and storage requirements compared with approaches that do. This efficiency makes NDPCA viable even in resource-constrained environments.

Enhanced Task Performance

By spending the available bandwidth on the features that are pertinent to the task at hand, NDPCA ensures that the task model receives the most useful data the link can carry. This, in turn, leads to improved overall performance and accuracy.

Results

Figure 3: Top: Performance comparison for three different tasks. Our method achieves equal or higher performance than other methods. Bottom: Distribution of the total available bandwidth (latent space) between the two views for NDPCA (ours). The unequal allocation highlights the difference in the importance of the views for a given task.

Conclusion

Our paper, titled “Task-aware Distributed Source Coding under Dynamic Bandwidth,” introduces NDPCA as a solution to the challenges of data compression and communication in multi-sensor networks. By prioritizing the transmission of task-relevant features from multiple data sources and adapting to varying bandwidth conditions, NDPCA provides an efficient and flexible framework for optimizing data transmission. The significance of this work lies in its potential to enhance communication efficiency and improve task performance across a wide range of applications, from Internet of Things devices to large-scale sensor networks.

References

Task-aware Distributed Source Coding under Dynamic Bandwidth, Po-han Li, Sravan Kumar Ankireddy, Ruihan Zhao, Hossein Nourkhiz Mahjoub, Ehsan Moradi Pari, Ufuk Topcu, Sandeep P. Chinchali, Hyeji Kim, Advances in Neural Information Processing Systems (NeurIPS), 2023.